Hello! Welcome to another issue of China Chatbot. This edition is going out to all of our subscribers for free. That’s because we want to tell you about a new way to join the Lingua Sinica community. We’re now offering a 20 percent discount for educators and students, which you can access here. At that price, it’s just US$4 a month or US$40 annually to enjoy all the benefits of paid membership — including early access to every China Chatbot bulletin and our monthly CMP Discourse Tracker. Of course, you’ll also be supporting the work we do.

In this issue, I take a look at the Party’s latest UN plan to win foreign friends through AI, ask why state media is ignoring talks between China’s most elite AI scientists and their foreign counterparts, and take a deep dive into how the biggest companies in Chinese AI are teaming up to take on ChatGPT.

No more thoughts from me this week — let's cut straight to the chase. Enjoy!

Alex Colville (Researcher, China Media Project)

_IN_OUR_FEEDS(3):Chinese AI, Global Vision

At a UN meeting on September 25 attended by prominent representatives from 80 countries, Foreign Minister and Politburo member Wang Yi (王毅) launched China’s “AI Capacity Building and Inclusiveness Plan” (人工智能能力建设普惠计划). The document sets out a raft of material benefits through which China says it is willing to deepen international cooperation, including data sharing and security, the production and supply of AI technology, joint AI laboratories, educational exchanges, and an “open source AI community.” The meeting was covered by Xinhua and the Global Times, and on September 27 the People’s Daily discussed it in “Harmony” (和音), the paper’s dedicated column for international commentary. People’s Daily editors wrote that the Plan was aimed at developing the Global South, reflecting China’s inclusive vision and its “leading role in global AI development and governance.”

TL;DR: Since Xi launched the “Global AI Governance Initiative” last October, the Party has been making concrete moves to take the global lead in AI development and security cooperation, opening up a new conduit for influence and business in the Global South.

Talks? What Talks?

For the second time, a dialogue in which China’s most prominent AI scientists discuss AI safety with their leading international counterparts has received no coverage in Chinese state media. The talks, part of the series of International Dialogues on AI Safety (IDAIS), took place at the start of this month in Venice. A former Chinese Vice-Minister of Foreign Affairs and elite scientists such as Andrew Yao and Zeng Yi all contributed to a statement on AI risk released on September 16. While this got column inches in The New York Times, Chinese outlets were silent. Nor did key outlets like the People’s Daily and Xinhua write about the Beijing leg of the talks, held during the Two Sessions in March, even though that round also drew coverage in international media. The handful of outlets that did cover the Beijing talks, such as CGTN and Beijing Radio and Television (北京广播电视台), stuck to terse bulletins that implied the talks were a Chinese initiative rather than convened by an international group.

TL;DR: No matter how important the scientists are, or how closely their goals align with Party policy, talks like these would have to be Chinese-led and include a big-shot official to make a splash (cf. Wang Yi’s UN announcement or the 2024 World AI Conference).

It’s the Applications, Stupid

There are more signs that the Chinese system is pivoting away from building Large Language Models (LLMs) as an end in itself and toward putting these LLMs, the foundation of generative AI, to work. On September 20, a research group under Peking University released an “AI Development Index” ranking Chinese cities on metrics such as patent applications, their share of the nation’s AI jobs and talent supply, and the number of AI education institutions. Beijing came out on top, with Shenzhen and Shanghai a distant second and third. On September 18, Suzhou released an Action Plan for integrating AI into a range of industries, joining tech-heavy cities such as Beijing, Shenzhen and Foshan that have released similar plans. On the same day, Beijing Daily (北京日报) reported that prominent figures at this year’s Bund Conference (a gathering of AI entrepreneurs and scientists in Shanghai) had agreed that large models are “moving from ‘competing for parameters’ [how big a model can get] to ‘competing for applications’ [finding uses for models in the real world].”

TL;DR: The bar for success is being raised beyond the “Hundred Model War,” and the next phase could involve harnessing the competitive streak of promotion-hungry officials.

_EXPLAINER:Qihoo 360’s AI Assistant (360 AI助手)

What’s that?

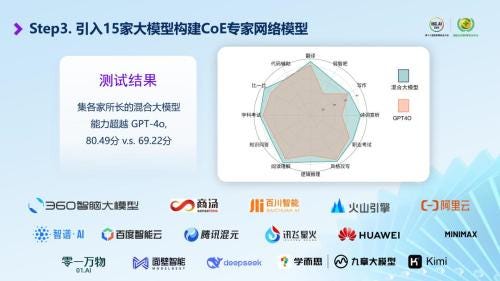

In August, Qihoo 360, one of China’s biggest software security companies, teamed up with the makers of 15 of the biggest home-grown Chinese LLMs, announcing that all 15 models would be available in every Qihoo 360 product. The company put them all on a website where users can compare the models’ answers, and also built them into its search engine, where the models interact with each other to produce an optimized answer.

Huh, so who’s in this alliance?

Everyone who’s anyone. Giants like iFLYTEK, Tencent, Baidu, Alibaba and ByteDance, as well as big-name start-ups like Moonshot AI, MiniMax and 01.AI. One Chinese writer compared the alliance to The Avengers (just as popular in China as in the US), whose heroes can only bring down the big boss, Thanos, by teaming up.

Who’s Thanos here?

Obviously ChatGPT, the colossus of the LLM universe. The idea (as per Qihoo 360’s chart in the slide below) is that each LLM has unique strengths: one may have been trained on medical data, while another may be especially good at logical reasoning. None of them can outperform ChatGPT on its own, but working together they might stand a chance.

How are they going to work together then?

Through what Qihoo 360 calls a Mixture of Experts (MoE), in which several LLMs are placed in the same system and assigned to work on a question. The Economist reported last week on other Chinese companies, like DeepSeek, using a related concept (though in DeepSeek’s case the “experts” are sub-networks inside a single model rather than separate LLMs). So when you ask a question on Qihoo 360’s search engine, multiple LLMs work on the same problem: one generates an answer, and two others then assess and summarize it.
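The generate, assess, summarize chain described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Qihoo 360’s actual code: `answer_with_panel`, `PanelAnswer` and the `Model` type are hypothetical names, and each “model” is just a function from prompt to text standing in for a call to a real LLM.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in: a "model" is any function that takes a prompt and
# returns text. In a real system each would wrap a call to a different LLM.
Model = Callable[[str], str]


@dataclass
class PanelAnswer:
    draft: str      # first model's answer
    critique: str   # second model's assessment of that answer
    final: str      # third model's summary of draft + critique


def answer_with_panel(question: str, generator: Model,
                      critic: Model, summarizer: Model) -> PanelAnswer:
    """Generate -> critique -> summarize, each step handled by a different model."""
    draft = generator(question)
    critique = critic(
        f"Question: {question}\nAnswer: {draft}\n"
        "Assess this answer and point out any errors."
    )
    final = summarizer(
        f"Question: {question}\nAnswer: {draft}\nCritique: {critique}\n"
        "Write a concise final answer."
    )
    return PanelAnswer(draft, critique, final)


if __name__ == "__main__":
    # Toy models that return fixed strings, just to show how data flows.
    result = answer_with_panel(
        "List Churchill's most famous quotes.",
        generator=lambda p: "quote A; quote B",
        critic=lambda p: "needs more analysis",
        summarizer=lambda p: "summary of quotes A and B",
    )
    print(result)
```

Note what the chain does not do: no step checks the draft against an outside source, so a wrong draft can pass straight through the critique and into the final answer.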

What are they getting out of it?

If by “they” you mean the companies that built these LLMs, I’m not sure. The Chinese government is encouraging developers to make their LLMs “open source,” meaning anyone can build a model into their own project for free. Maybe the developers hope more users will interact with their software, buffing its reliability through extra training.

And what about Qihoo 360? What do they get out of it?

They won’t be getting much money, since there’s no paywall. It could be a way to drum up more customers for their products, or an experiment by their engineers to remain competitive with the latest US tech while getting around US chip sanctions and the limits of the scaling law.

Urgh please God make the jargon stop.

It’s ok, “scaling law” just means “more is more.” More data + bigger LLMs + better computing = smarter AI. But scientists are hitting the limits of how far this can be pushed without an overhaul. If they didn’t adapt, ChatGPT would reach the point where its newest, most gargantuan models needed more electricity than an entire nuclear reactor generates. Even then, it would be nowhere near as complex as the human brain, which is highly fuel-efficient, running on nothing but oxygen and whatever you’ve put in your stomach.
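For anyone who wants the one-equation version, here is a sketch assuming the power-law form popularised by Kaplan et al. (2020), in which a model’s test loss $L$ (lower is better) falls as its parameter count $N$ grows. $N_c$ is a fitted constant, and the exponent $\alpha = 0.1$ used below is an illustrative value, not a measured one.

$$
L(N) = \left(\frac{N_c}{N}\right)^{\alpha}
\quad\Longrightarrow\quad
\frac{L(kN)}{L(N)} = k^{-\alpha}
$$

$$
k^{-\alpha} = \tfrac{1}{2}
\;\Longrightarrow\;
k = 2^{1/\alpha} = 2^{10} = 1024 \qquad (\alpha = 0.1)
$$

In other words, under these assumptions halving the loss takes a model roughly a thousand times bigger, which is why “more is more” eventually runs into hard limits on chips and electricity.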

So Chinese scientists are trying to get around this?

Yes, and they may have gotten a head start. US sanctions cutting Chinese tech off from the latest chips limit the data, computing power and size of Chinese LLMs, forcing some companies to make the most of what they’ve got. In July, Qihoo 360’s chief scientists published a paper explaining that their version of an MoE model would harness the strengths of several LLMs while being more efficient, compact and powerful than any of them alone.

Ok, so it could be about boosting efficiency. Jargon over?

I promise.

Great. Any other payoffs to this alliance?

Getting different LLMs to check each other’s work could add an extra layer of security to search answers, weeding out the hallucinations and sensitive content that have been bugging Chinese tech companies and Party censors alike. One article from a Chinese publicist asserts that this mixture of experts “will significantly improve the accuracy and integrity of the answers.”

Does it?

Not from what I’m seeing, no. No matter how many LLMs are checking an answer, the data they’re fed is crucial. During my testing (see below), Qihoo 360’s search engine gave answers containing a lot of factual errors, picked up from poorly researched articles on the internet.

Right. So are there any downsides to banding together?

Yep, MoE has a big flaw: load imbalance.

What did I say about jargon?!!

Yeah, I lied. But you’ve come this far and it’s nearly over. Load imbalance is a vicious circle in which the same few LLMs keep getting chosen for tasks. That makes them more reliable through extra training, which in turn makes them even more likely to be chosen. Despite supposedly having at least 15 LLMs to choose from, Qihoo 360’s search engine used just three for every question I asked, across a variety of topics. I’m not 100% certain, but it’s possible Qihoo’s system is out of balance: like assembling the Avengers, then having Captain America, Iron Man and the Hulk take on Thanos while the others kick around on the sidelines.
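The load-imbalance feedback loop can be illustrated with a toy simulation, sketched here under loud assumptions: `route`, `simulate` and `balance_penalty` are hypothetical names, each expert’s quality is a single number, and being picked nudges that number up. Real MoE routers are learned gating networks, and the penalty here is only a crude stand-in for the load-balancing terms such systems add during training; none of this describes Qihoo 360’s actual system.

```python
def route(scores: list[float], k: int) -> list[int]:
    """Pick the k highest-scoring experts (ties go to the lower index)."""
    return sorted(range(len(scores)), key=lambda i: (-scores[i], i))[:k]


def simulate(initial_scores: list[float], k: int = 3, rounds: int = 100,
             gain: float = 0.01, balance_penalty: float = 0.0) -> list[int]:
    """Return how many times each expert was picked over `rounds` questions.

    Toy assumption: every time an expert is picked it gets a little more
    "training", so its score rises by `gain`. A nonzero `balance_penalty`
    subtracts a cost per past use, nudging the router towards idle experts.
    """
    scores = list(initial_scores)
    usage = [0] * len(scores)
    for _ in range(rounds):
        # Score the router actually sees: quality minus a usage penalty.
        effective = [s - balance_penalty * u for s, u in zip(scores, usage)]
        for i in route(effective, k):
            usage[i] += 1
            scores[i] += gain  # the rich get richer
    return usage


if __name__ == "__main__":
    # 15 experts whose starting quality differs only very slightly.
    start = [0.5 + 0.001 * i for i in range(15)]
    print(simulate(start))
    # -> [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 100, 100, 100]
    print(simulate(start, balance_penalty=0.05))
```

With no penalty, the three experts that start fractionally ahead take every question; even a small per-use penalty spreads the work across all 15.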

_ONE_PROMPT_PROMPT:The internet is full of motivational quotes falsely attributed to famous historical people. Would Qihoo 360’s new screening process (see above), and the company’s general emphasis on stemming the flow of fake news, successfully sift these out?

I asked Qihoo 360’s new search engine to give me a list of (picking a name off the top of my British head) Winston Churchill’s most famous quotes:

A third of the quotes that the first LLM, Alibaba’s “Tongyi Qianwen” (通义千问), attributed to the victor of the Battle of Britain were presented as cast-iron fact when they shouldn’t have been. As scrupulously noted by the International Churchill Society (apparently that’s a thing), these quotes are commonly pinned to him on the internet, but they are false.

Qihoo 360’s own “Smart Brain” (智脑) LLM critiqued the answer but focused on the lack of analysis rather than fact-checking quotes.

In a final flourish, ByteDance’s “Beanbag” (豆包) summarized the list, but with no fact-checking either:

I also asked ChatGPT for a list of Churchill’s famous quotes. It added a note whenever it found there was “no definitive proof” that he had said something he is famous for having said, and it successfully debunked bogus quotes:

It could be that these Chinese models do better with quotes from Chinese historical figures or with CCP rhetoric (especially the latter). But if general accuracy is the goal, it’s still Thanos: 1, Avengers: 0.