_China_Chatbot_23

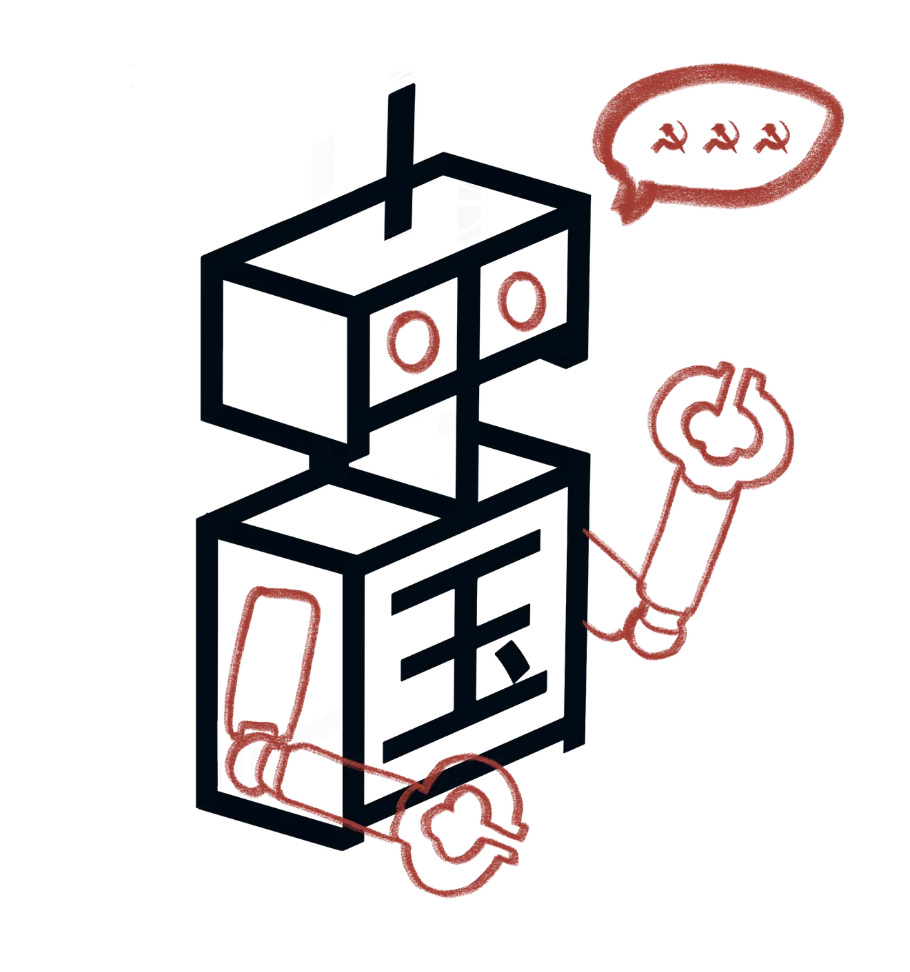

Nvidia and Hugging Face Jumping AI Export Controls?; AI use for “social experiments”; Growing Research Gaps

Hello, and welcome to another issue of China Chatbot! This week:

Are Nvidia and Hugging Face helping Chinese AI companies get around US chip export controls?

The CAC releases guidelines for AI in “social experiments.”

The pros and cons of methods to re-train Chinese LLMs.

A growing research gap around “closed-source” Chinese AI.

Enjoy!

Alex Colville (Researcher, CMP)

_IN_OUR_FEEDS(4):

Are Nvidia and Hugging Face Helping China Jump Chip Export Controls?

On June 11, Chinese LLM developers may have been given a way around US export controls on AI chips. Hugging Face is a New York-based company that serves as the international hub for open-source AI models. Nvidia designs the cutting-edge AI chips (GPUs) that the US has repeatedly banned from sale to Chinese companies. On June 11 the two announced they were launching “Training Cluster as a Service,” which appears to allow any organization active on Hugging Face to apply for access to Nvidia H100 and H200 GPUs for AI training and research. Access depends on an organization being approved by the two companies. Management at Hugging Face said they hoped the service could “help bridge the compute gap between GPU rich and GPU poor,” that it would enable organizations to build foundation models, and that any organization on Hugging Face could access it. Organizations listed on Hugging Face include Chinese companies like Alibaba and DeepSeek, as well as state-affiliated research institutions like BAAI. Chinese organizations have been blocked by the US government from buying cutting-edge AI chips to prevent the country from training sharper AI models. But providing training services to Chinese AI companies is a greyer area: the US government has only issued warnings about providing these services to help train Chinese AI, with special emphasis on whether there is knowledge that could be used for military purposes. It is unclear how many GPUs the service will let organizations access (the maximum option is “24>”) or for how long (the maximum option is four months and over). But it has been estimated that cutting-edge models like DeepSeek-R1 and GPT-4 needed tens of thousands of GPUs to train, so these training clusters are likely for small-scale research.

As of the time of publication, representatives from both Hugging Face and Nvidia had not responded to emails asking whether Chinese organizations would be allowed to access this training cluster.

Huawei Can Now Host DeepSeek Models

Huawei claims it is finding workarounds to some of the limitations created by US chip export controls. On June 15, the company published a research paper saying DeepSeek’s R1 model now runs just as well on their own AI chips and data centers as on Nvidia chips. DeepSeek’s flagship model is in high demand in China to fulfill government demands to digitize Chinese society and economy, but the model’s massive size makes it hard to host in the country’s data centers. US export controls on AI chips have been a significant hindrance, but Huawei has been experimenting with a new chip cluster, CloudMatrix384, to get around this bottleneck. Huawei claims that a “quantized” (compressed) version of DeepSeek-R1 run on its CloudMatrix system “effectively preserves the model’s capabilities,” producing results equal to those when run on DeepSeek’s own servers (which use Nvidia chips). In an interview with the People’s Daily on June 10, Huawei CEO Ren Zhengfei (任正非) said that despite Huawei’s AI chips being a generation behind the US, compensating through methods like new AI clusters still produced “practical” results. “The real challenge lies in our education and talent pipeline.”

LLM Providers Still Jockeying for Position

Two of China’s most prestigious AI start-ups still have some life in them. Some have written off the “four little AI powers” (AI四小强), a series of successful AI start-ups touted as leading the field only last year, as no longer in the race for the sharpest new AI models. But this week one company, MiniMax, launched several new models. This includes an AI agent (the new frontier for AI models) and Hailuo 02, an upgrade to their original image diffusion model. However, whereas once these models could be tested for free, now aspiring users must pay for credits upfront. Meanwhile, Moonshot AI announced a new model that it claimed was just as sharp as the mammoth DeepSeek-R1 at coding questions from developers, at a fraction of the size. A report from IDC, an American IT analysis firm, on June 6 announced that the LLM market in China was moving away from conventional LLMs and towards “multi-modal” LLMs (which can receive and generate text, video, image and audio).

A Flurry of Standards

The Chinese government released multiple standards outlining how AI can be safely used in Chinese society. On June 10, the Cyberspace Administration of China (CAC) released (non-binding) guidelines on how organizations can go about conducting “social experiments” that would allow AI to “promote harmonious co-existence” and “increase social cohesion.” These experiments, the guidelines say, can range from measuring individual attitudes to social controversies like video games, to broader tools for social control like how online information affects public opinion and “emergency response processes.” On the same day, an industry association under the CAC released a set of guidelines on providing AI content to minors, with companies involved in AI education including Alibaba, Baidu, Kuaishou and iFLYTEK all contributing. On June 16 AIIA, an AI industry association under the CAC and the National Development and Reform Commission, released a standard on the use of AI for software development (known in the West as “vibe coding,” where AI writes code for you automatically). On June 13, multiple government departments released draft guidelines on what data from cars (likely self-driving) can be shared abroad.

TL;DR: There are now so many powerful players trying to get round the chip export controls that the controls may soon become as leaky as a sieve. The CCP continues to prepare for its vision of a society transformed by AI, which includes using it for surveillance. Private Chinese enterprises working on LLMs are experimenting with different revenue streams and formats to stay afloat.

_EXPLAINER:

Model Editing (模型编辑)

What’s that then?

Ok, so full disclosure: in this week’s newsletter we’re reaching the edge of what developers currently know about AI. Things are more vague, less nailed down. I’m using this particular phrase as an umbrella term. It refers to multiple different methods AI developers are experimenting with to get an LLM to unlearn, forget or delete something it learned during its training.

Given how much I’ve been banging on about how DeepSeek is coding Party values into its models, knowing what we can do to remove them is imperative, especially now the company’s models are becoming more competitive.

So it’s difficult to remove Party values?

You bet. Each method found so far has its pros and cons.

What are the methods?

There are several, but I’m going to oversimplify four for you:

As you can see, developers have their work cut out for them when choosing a method: if it’s cheap it’s not thorough, and if it’s thorough it’s not cheap. If you wanted to do the job properly, the answer would probably be wholesale re-training (like re-building an entire house from the foundations), but that would be incredibly expensive.

How expensive?

So take DeepSeek, with its nearly 700 billion parameters. Back-of-envelope maths suggests re-training it would cost at least $5 million, and probably a lot more than that.
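
For anyone who wants to see that sum, here is a rough sketch. The inputs are assumptions that do not come from this newsletter: they are the figures DeepSeek itself reported for training the V3 base model that R1 is built on (about 2.788 million GPU-hours, priced at an assumed rental rate of $2 per GPU-hour). The function name is made up for illustration. This only covers one final training run, which is why the real bill is probably a lot higher.

```python
def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental cost of a training run: GPU-hours multiplied by the hourly price."""
    return gpu_hours * usd_per_gpu_hour


# Assumed inputs, taken from DeepSeek's own reporting on its V3 base model.
# They exclude earlier experiments, failed runs, data work and salaries.
REPORTED_GPU_HOURS = 2_788_000
ASSUMED_USD_PER_GPU_HOUR = 2.0

estimate = training_cost_usd(REPORTED_GPU_HOURS, ASSUMED_USD_PER_GPU_HOUR)
print(f"${estimate / 1e6:.2f} million")
```

Change either input and the total scales in proportion, so doubling the hourly rate doubles the estimate.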

Yikes.

Yeah, tech companies are of the same mind. Some appear to have plumped for fine-tuning instead: so just replacing some rotten beams in our house, rather than re-building the whole thing. But fine-tuning can be patchy. Back in February, California-based company Perplexity fine-tuned DeepSeek-R1 on a new dataset of information it knew was censored in China. However, when asked questions in Chinese, Perplexity’s DeepSeek model hadn’t been altered at all. It’s always possible you miss a spot or five with this method.

What about machine unlearning?

That’s much more targeted: developers go into a model’s training data and eradicate the undesirable parts of it. But multiple studies have found the forgotten data can come straight back again with just a little bit of fine-tuning.

So if retraining is too costly, and unlearning and fine-tuning are unreliable, is there any way to get rid of DeepSeek’s bad side?

Potentially. One way is hooking the model up to a RAG pipeline. Oversimplified, this pipeline basically makes the model ignore its data and training, and instead use external sources of information. Perplexity at one point had a version of DeepSeek-R1 (now deleted) that they appeared to have hooked up to the Western internet for its source material. From the few tests I did at the time, it was giving good, reliable answers to sensitive questions, including information on Taiwanese president Lai Ching-te.
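
To make the RAG idea concrete, here is a toy sketch under loud assumptions. It is not Perplexity's pipeline or anyone else's. It ranks a handful of in-memory sources by simple keyword overlap (real systems usually use vector search over a much larger corpus), then builds a prompt telling the model to answer from those sources only. The final call to an LLM is left out, and every function name and sample source here is hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Count query words (with multiplicity) that also appear in the document."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[word], d[word]) for word in q)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    return sorted(docs, key=lambda doc: score(query, doc), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Assemble a prompt that steers the model towards the retrieved sources."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return (
        "Answer using only the sources below. "
        "If they do not cover the question, say so.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical external sources standing in for a live web search.
SOURCES = [
    "Lai Ching-te took office as president of Taiwan in May 2024.",
    "Hugging Face is a hub for open-source AI models.",
    "Nvidia designs GPUs used for AI training.",
]

print(build_prompt("Who is the president of Taiwan?", SOURCES, k=1))
```

The design point is that the model's own weights are left untouched: whatever it absorbed in training is still in there, and the pipeline just puts better material in front of it at question time.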

Why do you care about retraining DeepSeek so much? If it’s such a challenge, why not just use another model?

Because DeepSeek has some tempting attributes for international AI developers: it’s a cutting-edge reasoning model that (unlike OpenAI and Anthropic’s products) can be deployed for free and (unlike Meta’s products) re-programmed without fiddly copyright requirements. If successfully rehabilitated and regulated, DeepSeek could safely aid developers across the world in building chatbots, without their users getting a face full of Chinese propaganda. It would be a shame to throw the baby out with the bathwater.

_ONE_PROMPT_PROMPT:

This week I was aching to take two new AI models for a quick spin, but instead uncovered a research problem I’d like to share with you.

By all accounts, these two models are ground-breaking, level-pegging with creations from OpenAI. The first is ByteDance’s Seedream 3.0, which (as we covered last issue) has been found by a California-based AI analysis company to be just as sharp in image generation as GPT-4o, the model everyone was using to create Studio Ghibli pictures.

The other is Huawei’s Pangu Ultra MoE (盘古Ultra). Tony Peng (former head of communications at Baidu) has pointed out Huawei built this model on their Ascend chips, the company claiming it reached the same level as DeepSeek-R1. Peng arrives at a big conclusion: if Huawei is correct, export controls on AI chips alone may not be enough to prevent China from creating world-class LLMs. Indeed, in a recent interview with the People’s Daily, Huawei’s CEO Ren Zhengfei (任正非) said he thought the company’s biggest problem now was talent supply, not the chip bottleneck created by US export controls.

If only we could verify for ourselves. Both of these models are closed-source, only accessible via company APIs. Try as I might, I cannot access either of them. The login process for Huawei’s cloud software is so stringent that beside the usual request for a Chinese phone number — which we at CMP have a workaround for — it also asks for a facial ID scan. Meanwhile over at ByteDance’s “Volcano Engine” (火山引擎) interface, API access is only possible with a code (or “key”). But to get that you need a Chinese ID, and when I pushed a bit harder I got an explicit message saying API access “is not available in your country.”

This could be a way for the two companies to keep their AI models on the down-low internationally. Given everything they have gone through with Western scrutiny of their products, perhaps they feel they’ve had enough publicity. But it is also possible this is a bureaucratic hiccup. In my own experience, it’s common in China for registration procedures to be designed with only Chinese users and their ID documents in mind, leaving foreigners in the lurch. This could reflect a lack of interest in or awareness of the outside world, and an obsession with security protocols, rather than deliberate attempts to bar foreigners.

Regardless of reason, it raises a concern. If outside researchers are unable to test new closed-source models from companies as important as Huawei and ByteDance, it narrows our understanding of the Chinese AI ecosystem and its current capabilities. It risks leaving us in the dark if a new DeepSeek contender comes along.

I may of course be wrong about access to these two models — my attempts at getting around these inverse Great Firewalls were by no means exhaustive. If anyone knows how to get hold of these do leave a comment!