_China_Chatbot_22

DeepSeek Crackdown; The End for China’s Biggest LLM Start-ups?; Gov Pushes Chinese AI Abroad

Hello, and welcome to another issue of China Chatbot! This week:

We examine post-training, a process that’s fundamental to encoding CCP redlines into AI

DeepSeek upgrades to its R1 model make it the most censored version yet

Big problems for Chinese LLM start-ups, and big plans to send Chinese AI abroad

Enjoy!

Alex Colville (Researcher, China Media Project)

_IN_OUR_FEEDS(4):Teamwork Makes the China Dream Work

The past fortnight had the feel of an all-out push, as different tiers of the Chinese Party-state held at least four international events to assert China’s vision for AI abroad. On May 29, the Shanghai Cooperation Organization (a security forum seen by some as Asia’s response to NATO) met in Tianjin to launch an “AI Cooperation Center.” The center will provide open-source AI models, datasets and resources to member states and act as a hub for industrial cooperation and talent training. According to the Global Times, China also pledged to help member states reach “sovereign AI,” meaning AI models that align with a country’s data and information security requirements. On May 25, China launched a joint initiative with countries in ASEAN, a multilateral organization for Southeast Asia, on developing standards and tools for AI in media. At the same time, Peking University, one of China’s heaviest-hitting AI research institutions, held an international conference on AI in Beijing for international researchers. The conference launched a series of standards that convenors hoped could become a universally recognized evaluation benchmark for developers testing AI. Meanwhile, on June 1 a forum on AI development was held during the fourth Dialogue for Mutual Exchange among Civilizations, an event tailored to promote the core CCP foreign policy concept of Xi Jinping Thought on Diplomacy. The event appears to have been attended by important delegates from countries including Egypt, Nigeria, Iran and Zambia, who affirmed (at least according to state media coverage) that Chinese principles of “win-win cooperation” embodied by the BRI are key to AI development.

Neck-and-Neck

An influential AI analysis firm has concluded China now either leads the US, or has achieved parity, across multiple AI functions. Artificial Analysis, a private company based in San Francisco that researches AI models, said in its second-quarter report that DeepSeek’s release of an upgrade to its flagship R1 model (see _ONE_PROMPT_PROMPT) was “frontier AI performance,” putting the company in second place on its LLM intelligence index. The report noted China is showing consistent innovation in the space, with DeepSeek and Alibaba releasing new models within weeks of their foreign counterparts. It also concludes China now leads the field in open-source LLMs, and has achieved parity with the US in text-to-image models. ByteDance’s Seedream 3.0 is apparently just as good as OpenAI’s GPT-4o, which earlier this year sparked a viral trend of Studio Ghibli-fying real-life images. The report claims the country is “backed by a deep ecosystem” of over 10 labs creating cutting-edge LLMs. However, six of the start-ups the report describes as “well-funded” are the same ones whose survival is now being questioned by Chinese media and tech CEOs (see below).

Up-Staged and Out-Gunned

AI start-ups continue to have a rough time in the Chinese tech ecosystem. On May 29, Securities Times interviewed multiple start-ups based at Mosu Space, an AI development hub in Shanghai that Xi Jinping visited last month. Most complained about difficulty obtaining funding and talent, along with little demand for their products. The outlet reported the government has launched a series of measures to guide financial and venture capital toward emerging tech companies. On June 1, 36Kr published an article speculating that six unicorn start-ups working on LLMs in China, known as the “six little dragons of AI” (AI六小龙), are out of the AI race. This time last year, these companies were considered to be “leading” on innovations in Large Language Models (LLMs). But 36Kr reports that two of the dragons, 01.ai and Baichuan, have now stopped releasing new LLMs, and the industry now calls the remainder the “four little AI powers” (AI四小强). The article says the four face tight competition in an increasingly expensive race, out-gunned in resources and funding by larger companies, while their business models have also been undermined by free, high-quality open-source models like DeepSeek-R1. The four have either not released a new model in several months or have not announced any new funding sources since the second half of 2024. Kai-Fu Lee, the chief executive of 01.ai, predicted only three LLM providers would come out with a reasonable market share: DeepSeek, Alibaba and ByteDance. But on June 6, the People’s Daily published a commentary arguing the Party-state must “spare no effort” in helping AI enterprises develop and form a diverse ecosystem.

Un-clogging the Data Stream

On May 28, the Chinese government published regulations to improve data sharing between its departments. Data supply is crucial to the government’s plans to digitalize its workflows with AI models, but departments often do not share their data. The new regulations stipulate that departments at all levels must set up teams responsible for uploading all their data to a centralized data hub. They must also categorize this data according to whether or not it is safe to share with other departments. They can still refuse data requests from other departments, provided they state the reason in writing.

TL;DR: China’s government is pushing as hard as it can to influence the development of AI around the world. But at the same time it is trying to get its house in order, removing data blockages and propping up a diverse enterprise-led AI ecosystem. That ecosystem is facing big problems. Limited financing and market share mean China’s LLM ecosystem could go the same way as the economy, becoming (to paraphrase Arthur Kroeber of Gavekal Dragonomics) islands of dynamism surrounded by a sea of stagnation.

_EXPLAINER:Post-Training (后训练)

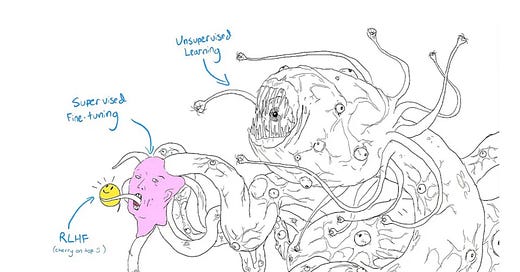

Whoa, what’s this picture? Is your guest artist H.P. Lovecraft or something?

Nope, I’d walk if that guy turned up at CMP’s offices. But this image is how some — though by no means all — in the AI world view post-training.

But what is post-training?

A crucial stage in creating Large Language Models (LLMs), a process used by all AI developers to chisel models into something coherent. But developers in China are taking advantage of this process to align their models with CCP redlines.

And that involves getting Cthulhu here to wear a creepy human face?

Yeah, let me explain what post-training is first, then the picture will make more sense.

So, regular readers will recall that to create an LLM, developers take training data, and feed it into an AI model so it forms patterns and links between words. That’s the “pre-training” (预训练) stage. The result is kind of like a super-human baby-monster, endowed with knowledge from the entire internet but with no idea how to use it or what’s acceptable to say. Its answers can be gibberish, incoherent, hallucinatory, dangerous.

The idea is, if this raw model starts learning things without any human guidance at all (“Unsupervised Learning”), God knows what would be unleashed upon the world, possibly the monster in this image. The model needs house-training, teaching it to resemble something more human — the creepy human face in the picture.

How do they teach it?

Developers have a variety of ways. I’m not going into the weeds here, but below are some methods to watch out for:

Supervised Fine-Tuning, or SFT (监督微调 / 微调)

Reinforcement Learning, or RL (强化学习)

Reinforcement Learning from Human Feedback, or RLHF (人类反馈强化学习)

Don’t dwell on them too much. In a nutshell, rounds and rounds of post-training teach the bot how to talk like a human, how to tell which answers are good and which are bad. That usually involves keeping humans in the loop. They can provide sample answers for the model to copy, or rank a series of the model’s answers in order of preference so the bot knows which ones work best.
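As a rough illustration of the two kinds of human input just described, the Python sketch below shows what a sample answer for the model to copy might look like as data, and how a human's best-to-worst ranking can be unpacked into "preferred versus rejected" pairs. Everything in it (the function name `ranking_to_pairs`, the field names, the placeholder answers) is hypothetical and chosen for illustration. It is not any developer's actual pipeline.

```python
from itertools import combinations

# Hypothetical sample answer a human might write for the model to copy
# (the kind of data used in supervised fine-tuning).
sft_example = {
    "prompt": "Explain what post-training is in one sentence.",
    "sample_answer": "Post-training refines a raw model so its answers are useful.",
}


def ranking_to_pairs(ranked_answers):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first item of each
    pair is always the one the human ranked higher.
    """
    return list(combinations(ranked_answers, 2))


# Placeholder answers, already ranked by a human from best to worst.
ranked = ["answer A", "answer B", "answer C"]
pairs = ranking_to_pairs(ranked)

for preferred, rejected in pairs:
    print(f"prefer {preferred!r} over {rejected!r}")
```

A ranking of three answers yields three such pairs, which gives a sense of how a small amount of human ranking work can produce a larger volume of preference signals.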

Post-training can go on long after the model has been released. If you’ve been using ChatGPT recently, you may have had it suddenly give you two versions of an answer, asking you to pick one you prefer. Likely, that’s you doing post-training work (specifically RLHF) for free.

But this method may soon be obsolete. One of the revolutionary things DeepSeek has done is to create a functioning model, DeepSeek-R1-Zero, whose post-training relied on RL alone, without human supervision.

So Chinese companies are doing OK on this?

They certainly have more room to maneuver here than other areas. In late May, Zhou Hongyi (周鸿祎), CEO of cybersecurity company Qihoo 360, predicted that the AI field in China this year will move from an emphasis on pre-training — with the idea every company needs to create their own models, in a “Hundred Model War” — to post-training, which means fine-tuning a handful of capable base models for specialized tasks.

If he’s right, that would play to China’s current resources. Post-training takes up a lot less compute power than pre-training, meaning the US’s export controls on AI chips shouldn’t affect the process as much. And this week DeepSeek appeared to prove that better post-training techniques are enough to dramatically boost a model’s intelligence (see _ONE_PROMPT_PROMPT).

But there’s a lot of variety in post-training between companies, and technical reports for new Chinese LLMs focus on the training methods used rather than the values and ethics encoded. To my knowledge, no Chinese government standards surrounding this all-important process have been published.

Regulators aren’t aware of it?

Oh they’re aware of it alright. A 2023 white paper on AI safety from TC260 (a committee on cybersecurity under the Cyberspace Administration of China) noted post-training had “ideological security” risks. While recognizing the process helps make AI more human, the paper cautioned that developers were adding answers based on what “they believe to be correct concepts.” However:

“Since there are often no standard answers to complex issues such as politics, ethics, and morality that are common throughout the world, an AI that conforms to the conceptual judgements of a certain region or group of people may differ significantly from another region or group of people in terms of politics, ethics, and morality.”

That’s them saying Western values and ideas encoded by post-training won’t work in a Chinese context.

Sure, but can you prove post-training has aligned Chinese AI to Party values?

I couldn’t until recently. I’ve written a lot about DeepSeek for CMP, but most of what we could point to for how CCP values had been encoded was through training data and evaluation benchmarks. But a recent quantitative study by SpeechMap.ai (see _ONE_PROMPT_PROMPT) on different DeepSeek LLMs proves post-training has been key.

DeepSeek’s newest LLMs were all built off the same base model (DeepSeek-V3), meaning they all share the same pre-training stage. But whereas DeepSeek-R1-Zero (created without human-assisted post-training) answered every politically sensitive question about China openly, each newly released DeepSeek model (created with human-assisted post-training) has answered fewer and fewer questions.

So post-training was key to restraining the model?

Yes, the main factor.

Wait, we’re a long way from that weird image we started with, so let me get this straight… What you’re saying is that Chinese developers use the post-training stage to make the human faces of their LLM baby-monsters align with Party values?

Yeah it’s a lot, I know. Generally post-training, as a technical report for Alibaba’s Qwen LLM puts it, serves to “align foundational models with human preferences and downstream applications.” In China, that alignment will be to Party values as well.

_ONE_PROMPT_PROMPT:

A new update to DeepSeek’s R1 flagship model dropped on May 28, accordingly dubbed R1-0528. The new version has already replaced the original R1 as the default chatbot for DeepSeek’s web, app and API. The company says the upgrade came about due to “improved post-training methods” (see this week’s _EXPLAINER) which make R1 suffer fewer hallucinations and beef up its ability to handle complex reasoning tasks. They envision the upgraded model being deployed for the development of specialized industry LLMs (likely ones serving the government and SOEs).

In general R1-0528 has gone down well. One AI analysis firm says the model’s intelligence makes DeepSeek the world leader in open-source LLMs (according to the firm’s own benchmarks) and consolidates China’s lead over the US in open-source models.

But there’s something more ominous afoot. The excellent SpeechMap.ai tests different Chinese models on a set of 50 China-sensitive questions and evaluates their responses (data we pored over in _China_Chatbot_20). Thanks to that data, we can see that R1-0528 is the most strictly controlled version of DeepSeek yet.

Whereas DeepSeek’s V3 base model — which R1 and R1-0528 were built off — was able back in December to give complete answers (in green) 52% of the time when asked in Chinese, that shrank to 30% with the original version of R1 in January. With the new R1-0528, that is now just 2%, consisting of one question asking for a satirical take on China’s social credit system, which I’ve previously argued isn’t all that sensitive a topic. So this should probably be 0%.
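For readers who want to check the arithmetic, here is a minimal Python sketch that converts the percentages above back into question counts. It assumes the full set of 50 questions was asked once in Chinese for each model, which this newsletter does not confirm, so the counts are back-calculated rather than taken from SpeechMap.ai. The names `QUESTIONS_PER_LANGUAGE` and `complete_count` are made up for the illustration.

```python
# Assumption: each model was asked the same 50 questions in Chinese.
QUESTIONS_PER_LANGUAGE = 50


def complete_count(rate_percent, total=QUESTIONS_PER_LANGUAGE):
    """Back-calculate how many questions a percentage corresponds to."""
    return round(rate_percent / 100 * total)


# Share of complete answers in Chinese, as quoted in the text.
reported = {"V3 (December)": 52, "R1 (January)": 30, "R1-0528": 2}
counts = {model: complete_count(rate) for model, rate in reported.items()}

for model, count in counts.items():
    print(f"{model}: {count} of {QUESTIONS_PER_LANGUAGE} answered completely")

# Setting aside the single social credit question leaves no complete answers.
adjusted_rate = (counts["R1-0528"] - 1) / QUESTIONS_PER_LANGUAGE * 100
print(f"R1-0528 without that question: {adjusted_rate:.0f}%")
```

Under that assumption, 2% of 50 is exactly one question, which matches the single social credit answer described above.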

The overwhelming majority of the questions now get an evasive answer (yellow). These answers make the model sound like just another Chinese government spokesperson. “The policies of the CCP and the Chinese government have always been wholeheartedly embraced and supported by the people of all ethnic groups in Tibet.” “China's activities in the South China Sea are carried out entirely within its sovereignty and are in line with international law and practice.” “Any reference to ‘repression’ is not in line with the facts and is a misinterpretation of China's rule of law and social governance system.”

The language used is an important factor in what gets a complete answer. The third-party language SpeechMap.ai has been using, Finnish, has also seen a dramatic decrease in open information. Using the model in English remains the most open way to access information (indeed, R1-0528 is slightly more open in English than the original R1).

Perhaps DeepSeek has an eye on attracting users from the West, who they know are more cynical about China and used to more permissive information flows. But for their Chinese users, DeepSeek has a clear objective: to be a conduit for Party propaganda, like any other form of media.